The Effect of Cobots on Worker Productivity

January 29, 2024

Video: eyesfoto / Creatas Video via Getty Images.

One of the main selling points of collaborative robots is their ability to work safely near or even in conjunction with people. However, being told that a cobot is safe to work with and actually working side-by-side with a moving, whirring machine are two different things.

Would workers accept a cobot as just another co-worker? Or would they feel intimidated by the sight and sound of the machine? We set out to answer that question.

Our study looks at how the behavior of cobots might affect the efficiency of workers and the assembly process. Specifically, we sought to answer the following questions:

- How do the cobot’s motion parameters, such as speed and acceleration, affect efficiency?

- How does the sound of the cobot affect efficiency?

- What is the optimal design of a collaborative workstation?

New research aims to show how the sound level of a cobot at different speeds and accelerations affects the feelings and performance of workers on an assembly line. Source: University of Maribor

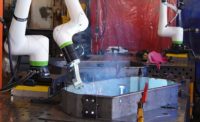

For our study, we created a collaborative workstation in a laboratory environment. The workstation consisted of a UR3e cobot from Universal Robots; a 2F-85 collaborative gripper from Robotiq; a worktable; a switch with green and red signal lights; and a signaling button.

The researchers designed an application in which a person and a cobot work together to assemble a finished product. The product consists of two Lego Duplo 2x2 bricks (subassembly 2) and one Lego Duplo 4x2 brick (subassembly 1). Source: University of Maribor

We designed an application in which a person and a cobot work together to assemble a finished product. The finished product consists of two Lego Duplo 2x2 bricks (subassembly 2) and one Lego Duplo 4x2 brick (subassembly 1).

At the beginning of the assembly operation, the cobot picks up subassembly 1 and moves it to the assembly point. Meanwhile, the person prepares two copies of subassembly 2, one green and one yellow, grasping the green brick with the left hand and the yellow brick with the right. The green brick is attached to the lower part of subassembly 1 and the yellow brick to the upper part.

When the cobot arrives at the assembly point, the green signal light turns on, indicating that the person may attach both subassembly 2 bricks. After completing the assembly, the person presses the button to let the process continue. The green light turns off and the red light turns on, prohibiting the person from reaching into the cobot’s workspace and their shared assembly area.

The cobot then carries the finished product to a buffer of finished products, and the process repeats until the person and the cobot have assembled a predefined number of products.
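The signal-light handshake described above behaves like a small state machine. The sketch below models one subset of the cycle under stated assumptions: the class and method names (`Workstation`, `cobot_arrives`, `button_pressed`, `cobot_unloads`) are hypothetical, the red light is assumed to be on before the first cycle starts, and a button press while the red light is on is treated as an error. It is an illustration of the protocol, not the control software used in the study.

```python
from dataclasses import dataclass, field
from enum import Enum


class Light(Enum):
    GREEN = "green"  # person may reach into the shared assembly area
    RED = "red"      # person must stay out of the cobot's workspace


@dataclass
class Workstation:
    """Hypothetical model of one assembly subset at the collaborative workstation."""
    target_products: int
    light: Light = Light.RED  # assumption: red light is on before the first cycle
    finished: int = 0
    events: list[str] = field(default_factory=list)

    def cobot_arrives(self) -> None:
        # Cobot has picked up subassembly 1 and reached the assembly point.
        self.light = Light.GREEN
        self.events.append("green")

    def button_pressed(self) -> None:
        # Person has attached the green and yellow 2x2 bricks and confirms.
        if self.light is not Light.GREEN:
            raise RuntimeError("button pressed while the red light is on")
        self.light = Light.RED
        self.events.append("red")

    def cobot_unloads(self) -> None:
        # Cobot carries the finished product to the buffer of finished products.
        self.finished += 1
        self.events.append("buffer")

    def run(self) -> int:
        """Repeat the cycle until the target number of products is reached."""
        while self.finished < self.target_products:
            self.cobot_arrives()
            # ... the person's assembly work happens while the light is green ...
            self.button_pressed()
            self.cobot_unloads()
        return self.finished
```

For example, `Workstation(target_products=30).run()` returns 30, matching the 30 finished products per subset used in the experiment. Rejecting a button press while the red light is on is a design choice of this sketch; the article describes the red light as a prohibition for the person, not as a software interlock.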


Experiment Design

The aim of the experiment was to investigate how varying the sound and activity of the cobot would affect a person in a collaborative workstation. To do this, we divided the experiment into two scenarios.

The first scenario consisted of a basic workstation. In the second scenario, we added a barrier that prevented visual contact between the person and the cobot, so the person could not see the cobot’s movement or speed. By changing the layout and splitting the experiment into two scenarios, we aimed to investigate how the ability to see the cobot’s movement might affect the worker’s efficiency. We defined efficiency as the time a worker needed to complete the collaborative assembly operation.

The researchers created a collaborative workstation in a laboratory. The workstation consisted of a UR3e cobot; a collaborative gripper; a worktable; a switch with green and red signal lights; and a signaling button. Source: University of Maribor

To study the impact of cobot sound and motion even further, we subdivided the experiment. Each scenario was divided into nine subsets, one for each combination of cobot motion (speed and acceleration) and sound level: three levels of cobot motion (60, 80 and 100 percent) crossed with three levels of prerecorded sound.
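The nine subsets form a full 3 × 3 factorial design. The sketch below enumerates them. Two assumptions are made: speed and acceleration are set to the same percentage, and each sound level is labeled by the motion level at which that cobot sound was recorded. Under that labeling, "motion higher than sound" means, for example, the cobot moving at 100 percent while the worker hears the sound recorded at 60 percent.

```python
from itertools import product

# Motion level: cobot speed and acceleration as a percentage (assumed to be set together).
MOTION_LEVELS = (60, 80, 100)
# Sound level: labeled by the motion level at which the cobot sound was recorded (an assumption).
SOUND_LEVELS = (60, 80, 100)


def factor_combinations() -> list[tuple[int, int]]:
    """All (motion, sound) pairs of the full 3 x 3 design: one subset each."""
    return list(product(MOTION_LEVELS, SOUND_LEVELS))


if __name__ == "__main__":
    for motion, sound in factor_combinations():
        print(f"motion {motion}%, sound recorded at {sound}%")
```

Of the nine pairs, three have motion above sound, three have them equal, and three have sound above motion. These are the three categories used in the results below.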

To study the effects of different sound levels on efficiency, it was necessary to simulate different sounds throughout the experiment. To achieve this, the sounds of cobot movements at previously defined motion parameters were recorded before the start of the experiment. To prevent environmental distractions, the participants wore noise-canceling headphones for the duration of the experiment. The prerecorded sounds were played through the headphones.

During the experiment, participants were not informed of any changes in the cobot’s motion parameters or the sounds they heard, nor whether the sound they heard corresponded to the cobot’s actual movements. The combinations of motion parameters and sound levels were presented in random order to keep learning effects and fatigue from biasing the results.
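Randomizing the order means each participant meets the nine combinations in a different sequence, so improvements from practice or slowdowns from fatigue are spread across conditions instead of piling up on whichever combination comes last. The article does not say how the random order was generated. The helper below, `presentation_order`, is hypothetical. It shows one reproducible way to shuffle, seeding Python's `random.Random` with the participant and scenario so that an order can be regenerated during analysis.

```python
import random
from itertools import product

# The nine (motion %, sound level) combinations of the 3 x 3 design.
COMBINATIONS = list(product((60, 80, 100), repeat=2))


def presentation_order(participant_id: int, scenario: int) -> list[tuple[int, int]]:
    """Hypothetical helper: a random but reproducible order of the nine combinations.

    Seeding with the participant and scenario gives each run its own order,
    which can be regenerated later when analyzing the data.
    """
    rng = random.Random(f"participant-{participant_id}-scenario-{scenario}")
    order = COMBINATIONS.copy()
    rng.shuffle(order)  # in-place shuffle; COMBINATIONS itself is untouched
    return order
```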

Fourteen people took part in the experiment (seven women and seven men), with an average age of 38 years. Before the experiment, the participants were familiarized only with the assembly procedure. They were not told about the different combinations of motion parameters and sound levels. The participants completed the first scenario (nine factor combinations at the collaborative workstation without the barrier) and then the second scenario (nine factor combinations at the collaborative workstation separated by the barrier). Each subset ended after 30 finished products had been assembled. The participants’ assembly times for each combination and their answers to a set of questions constitute the output data of the experiment.
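Assuming every participant completed all subsets as described, the size of the timing data set works out as follows. Each participant alone assembled $2 \times 9 \times 30 = 540$ products.

$$
\underbrace{14}_{\text{participants}} \times \underbrace{2}_{\text{scenarios}} \times \underbrace{9}_{\text{combinations}} \times \underbrace{30}_{\text{products}} = 7\,560 \ \text{assembly cycles}
$$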

Experiment Results

In the first scenario, in which participants worked together with the cobot without the barrier, 72 percent of participants achieved the shortest average assembly time when the cobot motion parameters were higher than the sound level; 21 percent achieved it when the motion parameters were equal to the sound level; and 7 percent achieved it when the sound level was higher than the motion parameters.

With the barrier in place, only 50 percent of participants achieved the shortest average assembly time when the cobot motion parameters were higher than the sound level. The percentage of participants who achieved the shortest average assembly time when motion parameters and sound levels were equal remained the same at 21 percent, while the percentage of participants who achieved the shortest assembly time at higher sound levels and lower motion parameters increased to 29 percent.

In the second scenario, fewer participants achieved their shortest average assembly time at higher motion parameters and a lower sound level, and more achieved it at a higher sound level and lower motion parameters. This shift suggests that sound may affect workers to some degree, although further analysis is needed.
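The percentages above come from finding each participant's fastest combination and sorting it into one of three categories. The sketch below shows that bookkeeping. The input format (a dict of mean assembly times per participant, keyed by `(motion, sound)`) and the sample numbers in the test are hypothetical, because the per-participant means are not published in this article. Ties go to whichever combination appears first, which is an arbitrary choice of this sketch.

```python
from collections import Counter

Combination = tuple[int, int]  # (motion level %, sound level)


def classify(motion: int, sound: int) -> str:
    """Compare the motion level with the level the sound was recorded at."""
    if motion > sound:
        return "motion > sound"
    if motion == sound:
        return "motion = sound"
    return "sound > motion"


def fastest_combination(mean_times: dict[Combination, float]) -> Combination:
    """Combination with the shortest average assembly time (first one wins a tie)."""
    return min(mean_times, key=lambda combo: mean_times[combo])


def category_shares(participants: list[dict[Combination, float]]) -> dict[str, float]:
    """Percentage of participants whose fastest combination falls in each category."""
    counts = Counter(classify(*fastest_combination(p)) for p in participants)
    return {category: 100 * n / len(participants) for category, n in counts.items()}
```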

The average assembly time without the barrier was 2.86 seconds. With the barrier, it decreased to 2.74 seconds, a 4.2 percent reduction. These preliminary results suggest that the barrier plays a role in workers’ performance. When workers cannot track the cobot’s movement and therefore cannot rely on visual cues, they might focus more intensely on the sound of its movements.
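The 4.2 percent figure is the drop in average assembly time relative to the no-barrier baseline:

$$
\frac{2.86\ \text{s} - 2.74\ \text{s}}{2.86\ \text{s}} \times 100\,\% = \frac{0.12}{2.86} \times 100\,\% \approx 4.2\,\%
$$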

The researchers created two experimental scenarios. The first consisted of a basic workstation. In the second, they added a barrier that prevented visual contact between the person and the cobot, making it impossible for the person to know the cobot’s movement and speed. Source: University of Maribor

Besides the objective data of assembly times, we also wanted subjective data from workers about our two scenarios. Which setup did they prefer?

After each combination, participants had to answer a question on a four-level scale and assess their ability to perform the work under the current conditions. After the experiment, they also had to indicate which scenario was more suitable: with the barrier or without it.

Nine of the 14 participants (64 percent) thought the workstation without the barrier was more suitable, while five preferred the workstation with the barrier. The participants who preferred the workstation without a barrier justified their decision with the possibility to observe the cobot’s movement and to be aware of its position. Those who chose the workstation with the barrier justified their decision mainly on the safety aspect and the feeling of security.

To support the results regarding the preferred scenario, we offered participants four possible responses to assess their comfort and ability to collaborate with the cobot under the defined motion parameters and sound levels. After each combination, the participants rated it with one of four options: impossible, difficult, appropriate, and great. For each scenario, we obtained 126 responses (14 participants times nine responses).

Participants were asked to rate each combination of variables in each scenario. For scenario 1, without a barrier, the researchers received 69 responses for “great” and 57 responses for “appropriate.” For scenario 2, with the barrier, the number of “great” responses decreased to 51, while the number of “appropriate” responses increased to 71. Source: University of Maribor

For scenario 1, without a barrier, we received 69 responses for “great” and 57 responses for “appropriate.” We received no responses for “difficult” or “impossible.” For scenario 2, with the barrier, the number of “great” responses decreased to 51, while the number of “appropriate” responses increased to 71. We received four “difficult” responses and no “impossible” responses.

Clearly, workers preferred the scenario without the barrier to the one with, indicating that the ability to see the robot plays an important role in human-robot collaboration.
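A quick tally confirms that the reported counts account for all 126 responses in each scenario and expresses them as shares. The counts are the ones reported above. The mean score uses an assumed numeric coding (impossible = 1, difficult = 2, appropriate = 3, great = 4) that the article itself does not use, so treat it only as a compact summary of the same counts.

```python
RATINGS = ("impossible", "difficult", "appropriate", "great")

# Response counts as reported above: 14 participants x 9 combinations per scenario.
RESPONSES = {
    "without barrier": {"impossible": 0, "difficult": 0, "appropriate": 57, "great": 69},
    "with barrier": {"impossible": 0, "difficult": 4, "appropriate": 71, "great": 51},
}


def percentages(counts: dict[str, int]) -> dict[str, float]:
    """Share of each rating, in percent, rounded to one decimal place."""
    total = sum(counts.values())
    return {rating: round(100 * counts[rating] / total, 1) for rating in RATINGS}


def mean_score(counts: dict[str, int]) -> float:
    """Average rating, coding impossible=1 ... great=4 (an assumed numeric scale)."""
    total = sum(counts.values())
    return sum((i + 1) * counts[r] for i, r in enumerate(RATINGS)) / total


if __name__ == "__main__":
    for scenario, counts in RESPONSES.items():
        print(scenario, sum(counts.values()), percentages(counts), round(mean_score(counts), 2))
```

Under this coding, the average rating falls from about 3.55 without the barrier to about 3.37 with it. The share of "great" ratings drops from 54.8 to 40.5 percent.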

Discussion

The aim of our study was to determine how motion, sound, and visual contact between the worker and the cobot would affect worker efficiency, or in our case, average assembly time.

In terms of motion, we found that the percentage of participants with the lowest assembly time was the highest for both scenarios when motion parameters (or, plainly, speed) exceeded sound levels. This would indicate that sufficiently high speeds lead to decreased assembly times. This is not surprising. Faster motion means shorter assembly times and increased efficiency.

As for the other two variables, our initial results indicated that visual contact between the cobot and the worker might have some impact on assembly times, but nothing could be concluded about the impact of sound levels. More advanced statistical analysis revealed that visual contact and sound level, individually, had no effect on average assembly time. Together, however, they did have an effect.
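"No effect individually, an effect together" is what statisticians call an interaction. The sketch below uses invented cell means, not data from the study, and collapses sound to two levels. It shows how both main effects can average out to zero while the difference-of-differences contrast does not. The article does not name the test the authors used. A formal analysis such as a two-way ANOVA would also judge statistical significance, which this arithmetic does not attempt.

```python
from statistics import mean

# Hypothetical 2 x 2 cell means in seconds; NOT data from the study.
# Keys: (barrier present?, sound level)
cells = {
    (False, "low"): 2.80,
    (False, "high"): 2.90,
    (True, "low"): 2.90,
    (True, "high"): 2.80,
}


def effects(cells: dict[tuple[bool, str], float]) -> tuple[float, float, float]:
    """Return (barrier main effect, sound main effect, interaction contrast)."""
    barrier = mean(cells[(True, s)] for s in ("low", "high")) - mean(
        cells[(False, s)] for s in ("low", "high")
    )
    sound = mean(cells[(b, "high")] for b in (False, True)) - mean(
        cells[(b, "low")] for b in (False, True)
    )
    # Difference of differences: does the sound effect change when the barrier is added?
    interaction = (cells[(True, "high")] - cells[(True, "low")]) - (
        cells[(False, "high")] - cells[(False, "low")]
    )
    return barrier, sound, interaction
```

With these made-up numbers, both main effects are zero, but the interaction contrast is about -0.2 seconds. A louder sound slows workers down without the barrier and speeds them up with it, so the two effects cancel out when each factor is averaged on its own.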

In the first scenario, in which participants worked together with the cobot without a barrier, 72 percent of participants achieved the shortest average assembly time when the cobot motion parameters were higher than the sound level. With the barrier in place, only 50 percent of participants achieved the shortest average assembly time. Source: University of Maribor

In short, increasing the speed and acceleration of the cobot is beneficial for efficiency, as long as the perceived sound level (loudness) corresponds to a lower motion level than the cobot’s actual motion. For example, when the cobot was operating at 100 percent but the sound was set to the lowest level, the mean assembly time was the lowest of all combinations in our study.

This was true regardless of whether or not participants could see the cobot. When participants are not aware of the cobot’s movements and believe that the cobot is moving slowly due to lower sound levels, their assembly times are shorter and efficiency is increased.

Subjectively, workers preferred workstations in which they had visual contact with the cobot, even though, objectively, they achieved lower average assembly times with the barrier in place. It seems that under those conditions, they performed better but felt uncomfortable.

Of course, our study was done in a laboratory with a limited number of participants. To gain a true understanding of human-robot collaboration, more work needs to be done on actual assembly lines under real-world conditions.

Editor’s note: This article is a summary of a research paper co-authored by Aljaz Javernik, technical associate; Klemen Kovic, researcher; and Robert Ojsteršek, Ph.D., associate professor.

ASSEMBLY ONLINE

For more information on collaborative robots, read these articles:

How to Effectively Deploy Cobots

Danfoss Automates Valve Assembly With Cobots

Cobots Can Weld
