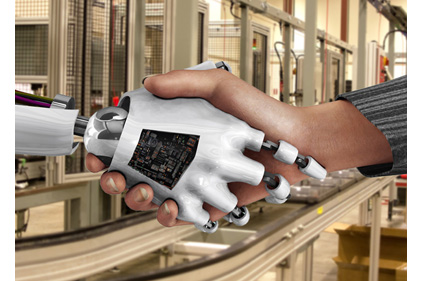

Human Arm Sensors Make Robots Smarter

Engineers at the Georgia Institute of Technology have developed a control system that lets humans and robots interact safely. The system uses arm sensors that can “read” a person’s muscle movements. The sensors send this information to a robot, allowing it to anticipate a human’s movements and correct its own.

“The problem is that a person’s muscle stiffness is never constant, and a robot doesn’t always know how to correctly react,” says Jun Ueda, a professor in the Woodruff School of Mechanical Engineering at Georgia Tech.

“The robot becomes confused,” explains Ueda. “It doesn’t know whether the force is purely another command that should be amplified or ‘bounced’ force due to muscle co-contraction. The robot reacts regardless.”

“The robot responds to that bounced force, creating vibration,” adds Ueda. “Human operators also react, creating more force by stiffening their arms. The situation and vibrations become worse.”

The Georgia Tech system eliminates these vibrations by using sensors worn on the operator’s forearm. The devices send muscle-activity signals to a computer, which tells the robot how strongly the operator’s muscles are contracting.
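For readers curious how a contraction reading could feed into a robot controller, here is a minimal Python sketch. It is not the Georgia Tech system: the co-contraction index, the gain limits (`max_gain`, `min_gain`) and all function names are hypothetical, chosen only for illustration. It assumes the forearm sensors yield flexor and extensor activation levels normalized to the range 0 to 1, and it lowers the robot’s force amplification as the two muscle groups stiffen together, so that “bounced” force is amplified less than a deliberate push.

```python
# Hypothetical illustration only; not the Georgia Tech controller.

def co_contraction_index(flexor: float, extensor: float) -> float:
    """Share of muscle activity that is simultaneous: 0 = none, 1 = fully matched.

    Assumes both inputs are EMG activation levels normalized to [0, 1].
    """
    total = flexor + extensor
    if total == 0.0:
        return 0.0  # relaxed arm: no co-contraction
    # The weaker muscle's activation is the overlapping part; compare it to the mean.
    return 2.0 * min(flexor, extensor) / total


def amplification_gain(flexor: float, extensor: float,
                       max_gain: float = 5.0, min_gain: float = 1.0) -> float:
    """Interpolate from max_gain (no co-contraction) down to min_gain (full co-contraction)."""
    cci = co_contraction_index(flexor, extensor)
    return max_gain - (max_gain - min_gain) * cci


def robot_force(measured_force: float, flexor: float, extensor: float) -> float:
    """Force the robot applies: the operator's input scaled by the adaptive gain."""
    return amplification_gain(flexor, extensor) * measured_force
```

With only the flexor active, `robot_force(2.0, 0.8, 0.0)` amplifies the push fivefold; with both muscles equally tense, the gain drops to 1 and the robot stops magnifying the stiffened, bounced force that would otherwise feed the vibration loop.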

“The system judges the operator’s physical status and intelligently adjusts how it should interact with the human,” says Ueda. “The result is a robot that moves easily and safely.”

“Instead of having the robot react to a human, we give it more information,” adds Ueda. “Modeling the operator in this way allows the robot to actively adjust to changes in the way the operator moves.”

“Future robots must be able to understand people better,” Ueda points out. “By making robots smarter, we can make them safer and more efficient.”

Ueda believes the “adaptive shared control approach for advanced manufacturing and process design” will have numerous applications in the automotive, aerospace and military sectors.
